ABOUT THE SYSTEM

Key features of our system

High-Resolution Analysis

High Robustness

High Accuracy

Large-Scale Image Analytics

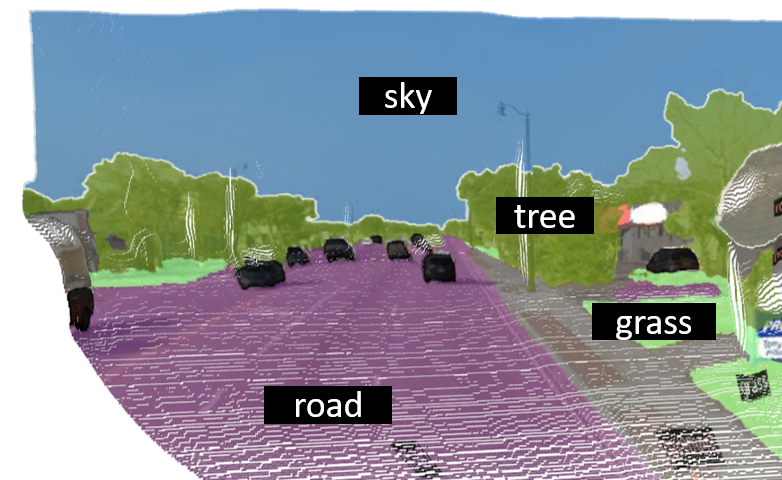

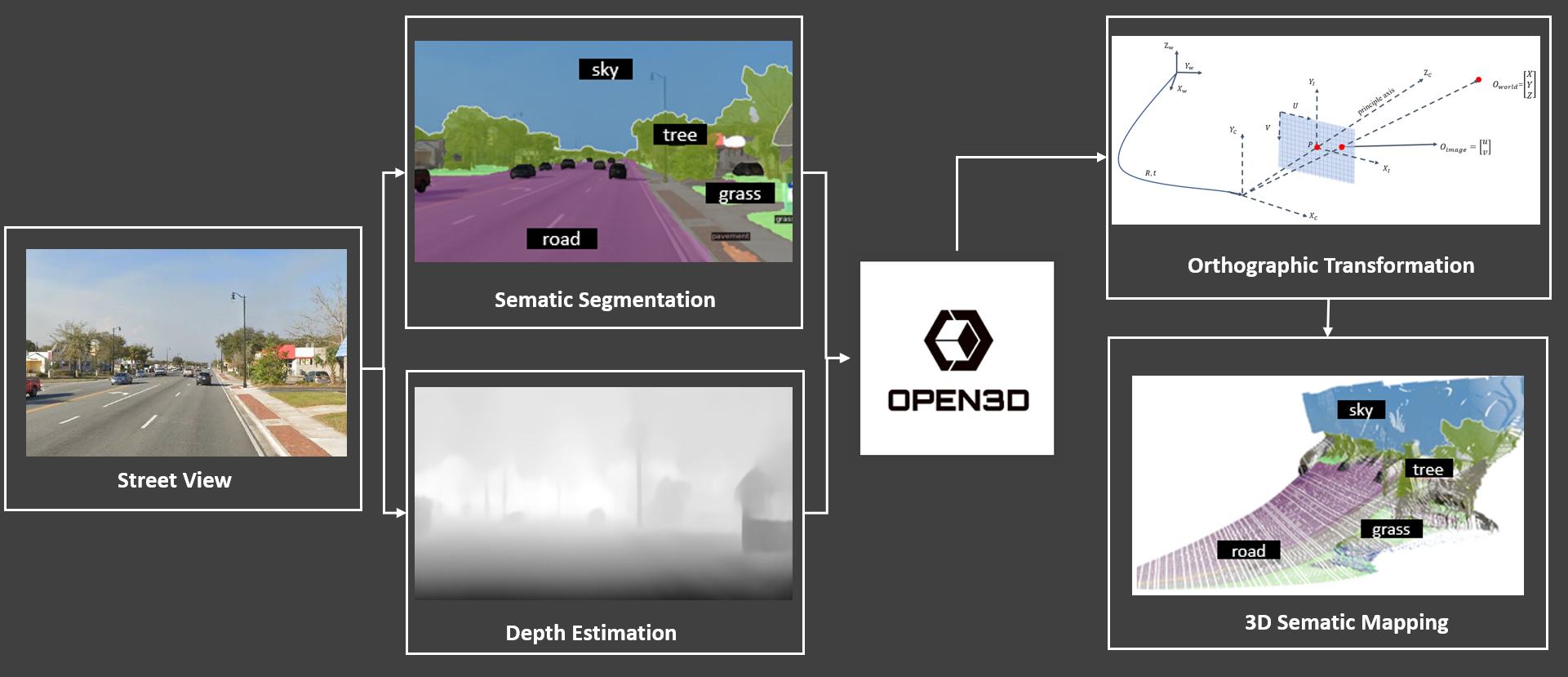

Semantic Segmentation

Depth Estimation

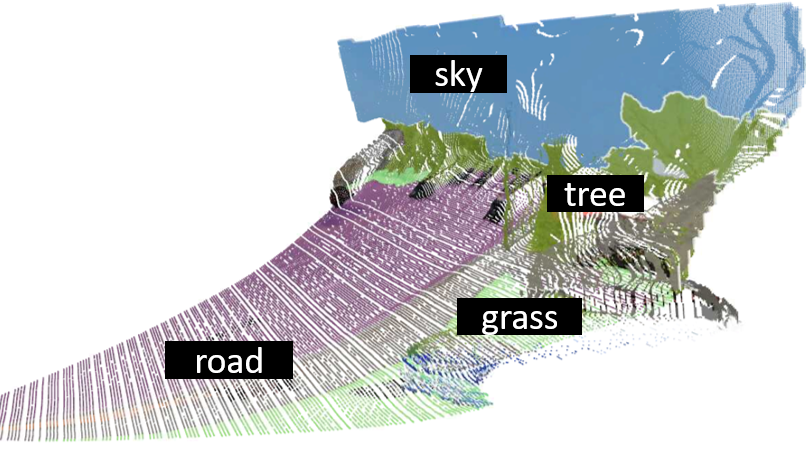

3D Driving Environment Estimation

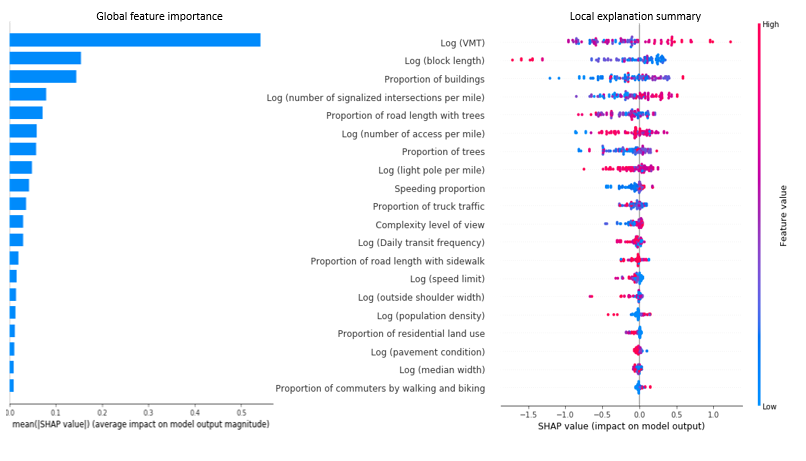

Fusion of Deep Learning Detection and Explainable Machine Learning

System Overview

This system extracts and interprets the visual driving environment along roads using street view images (e.g., Google Street View panoramas) and videos from vehicle-mounted cameras. Semantic segmentation and depth estimation are conducted first to obtain the class label and depth at each pixel of an image or video frame. An orthographic transformation is then applied to convert the 2D images into 3D representations that reflect the driver's view in the real world. Based on the proposed system, the following information can be generated from street view images and videos:

- Surrounding environments such as trees, buildings, signs, and driving complexity levels

- Safety blind spots for human-driven and automated vehicles

- Urban feature composition and urban greenery

- Walking environment for pedestrians and cycling environment for bicyclists
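As an illustration of how outputs such as urban feature composition and urban greenery could be derived from per-pixel segmentation, the sketch below computes the share of pixels in each class. It is a minimal example under stated assumptions, not the system's actual implementation: the class ids in `CLASS_NAMES`, the function `class_composition`, and the toy label map are all hypothetical.

```python
import numpy as np

# Hypothetical class ids; the real label set depends on the segmentation model used.
CLASS_NAMES = {0: "road", 1: "building", 2: "vegetation", 3: "sky"}

def class_composition(label_map: np.ndarray) -> dict[str, float]:
    """Return the share of pixels assigned to each class in a 2D label map."""
    counts = np.bincount(label_map.ravel(), minlength=len(CLASS_NAMES))
    total = label_map.size
    return {name: float(counts[cid]) / total for cid, name in CLASS_NAMES.items()}

# Toy 2x4 label map standing in for one segmented street view image.
labels = np.array([[3, 3, 2, 2],
                   [0, 0, 1, 2]])

composition = class_composition(labels)
greenery_share = composition["vegetation"]  # fraction of pixels labeled vegetation
```

Averaging such shares over many images along a road segment would give a segment-level composition; how the real system aggregates results is not specified here.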

The system can be applied to street-level images and videos collected at different types of road facilities, such as freeways, arterials, intersections, bike lanes, and sidewalks.
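The step from per-pixel depth to a 3D view can be sketched as follows. This is only an illustration: it assumes a simple pinhole camera with hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`), which does not hold for equirectangular panoramas, and it uses a top-down orthographic view as one possible reading of the transformation described above. The function names `backproject` and `top_down` are made up for this example.

```python
import numpy as np

def backproject(depth: np.ndarray, fx: float, fy: float,
                cx: float, cy: float) -> np.ndarray:
    """Lift each pixel (u, v) with depth z to a camera-frame point (x, y, z)."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column and row indices
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return np.stack([x, y, depth], axis=-1)  # shape (h, w, 3)

def top_down(points: np.ndarray) -> np.ndarray:
    """Orthographic top-down view: drop the vertical axis (y),
    keeping lateral offset (x) and forward distance (z)."""
    return points[..., [0, 2]]

# Toy 2x3 depth map (metres) with assumed intrinsics.
depth = np.full((2, 3), 10.0)
points = backproject(depth, fx=100.0, fy=100.0, cx=1.0, cy=0.5)
ground_plan = top_down(points)
```

Pairing each 3D point with its segmentation label would then place objects such as trees, buildings, and signs in the scene around the vehicle.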

Miles of Roads

Street View Images

Publications

Funded Projects

THE TEAM

The ones who make this happen

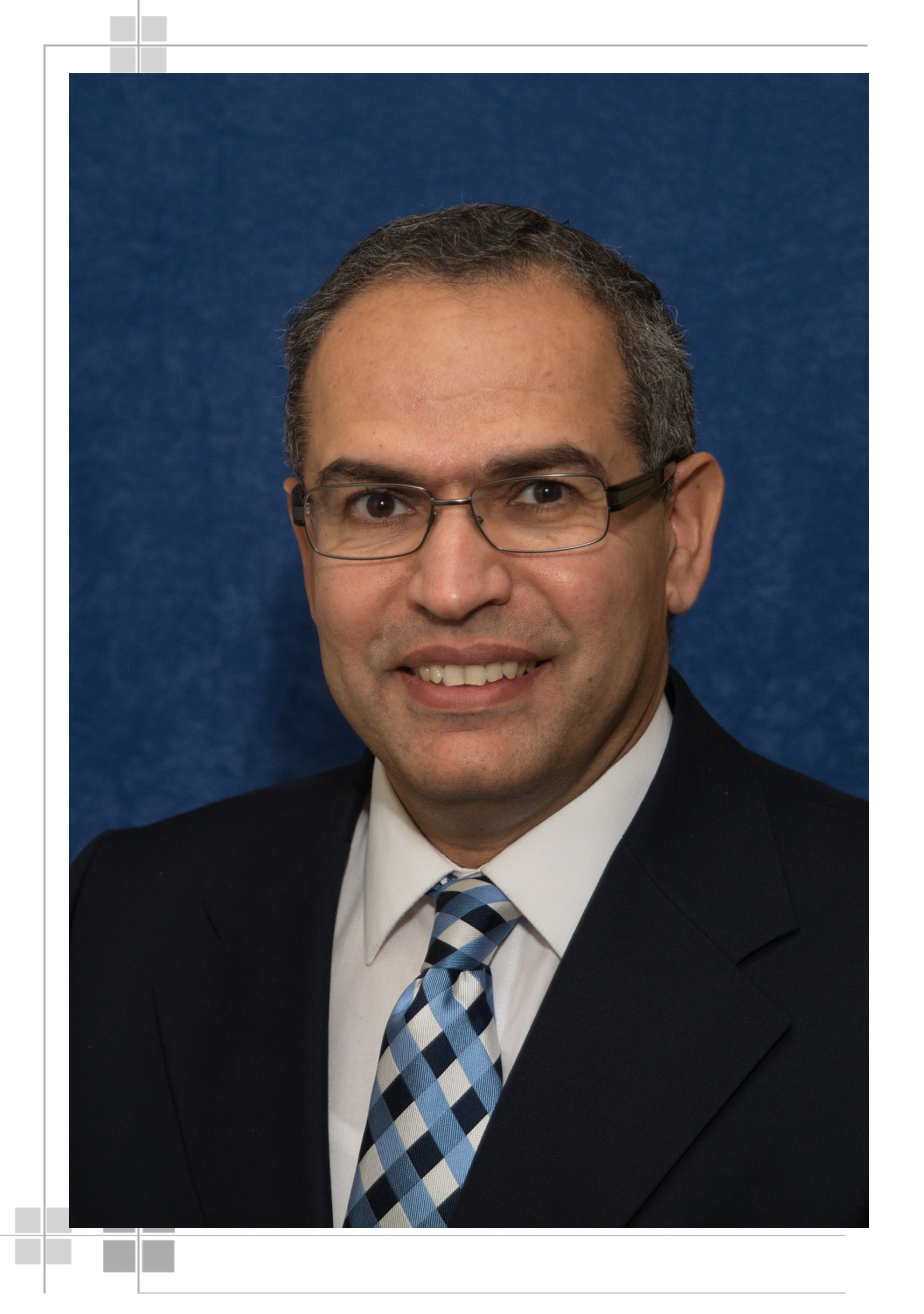

Dr. Mohamed Abdel-Aty

P.E., F.ASCE Trustee Chair

Dr. Yina Wu

Research Associate Professor

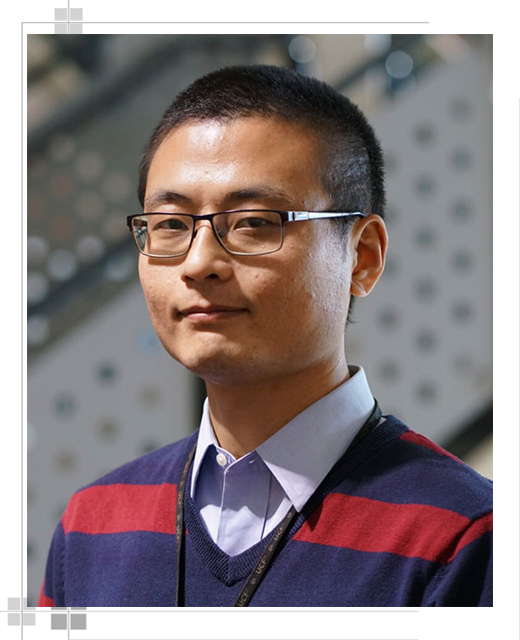

Dr. Qing Cai

Research Assistant Professor

Ou Zheng

Software Engineer

Our Performance.

Accuracy

Scalability Level

Robustness

OUR EXAMPLE

What we've done for safety